Capacity Management is concerned with ensuring that the capacity of the infrastructure is sufficient to meet business requirements in a timely and cost-effective manner. To achieve this, Capacity Management compares business-led requirements against measurements of the available capacity of a service. The results of this activity are then used to drive the changes needed to keep capacity aligned with need.

Capacity Management Activities

Capacity management involves five activities which can be summarised as follows:

Business Capacity Management

Services are assessed to reflect new, updated or forecast business requirements or to analyse any demand for changes in capacity proposed by the overall Capacity management process. This assessment needs to establish the required balance between requirement and cost in line with overall business strategies.

Service Capacity Management

The business requirement is translated into a service plan that establishes the capacity needed to achieve that requirement, to meet any Service Level Agreements and possibly to meet the relevant demands of Availability and Continuity Management.

Resource Capacity Management

The resources available to the service, including those specifically acquired as part of the service design, are assessed to ensure that they meet the requirement. This assessment will take into account any excess capacity elsewhere in the infrastructure.

Where the available capacity is insufficient or does not exist, a revised requirement is passed back to the Business Capacity Management process for analysis and approval.

Capacity Database Management

A database of current and historical resource, performance and availability information is maintained to permit accurate reporting of capacity against need for each service.
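As an illustration only, such a database might be sketched as two tables: one holding time-stamped measurements per service and resource, and one holding the agreed requirement for each service metric. The table, column and metric names below (`measurement`, `requirement`, `tps_capacity` and so on) are hypothetical, and SQLite is used purely to keep the sketch self-contained.

```python
import sqlite3

# Hypothetical schema: "measurement" holds current and historical readings,
# "requirement" holds the capacity each service needs for a given metric.
SCHEMA = """
CREATE TABLE IF NOT EXISTS measurement (
    service  TEXT    NOT NULL,
    resource TEXT    NOT NULL,
    metric   TEXT    NOT NULL,
    ts       INTEGER NOT NULL,  -- Unix epoch seconds
    value    REAL    NOT NULL,
    PRIMARY KEY (service, resource, metric, ts)
);
CREATE TABLE IF NOT EXISTS requirement (
    service  TEXT NOT NULL,
    metric   TEXT NOT NULL,
    required REAL NOT NULL,
    PRIMARY KEY (service, metric)
);
"""


def open_capacity_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the capacity database and ensure the tables exist."""
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def capacity_vs_need(conn: sqlite3.Connection, service: str, metric: str):
    """Return (latest measured value, required value) for one service metric,
    or None if either is missing."""
    return conn.execute(
        """
        SELECT m.value, r.required
        FROM measurement AS m
        JOIN requirement AS r
          ON r.service = m.service AND r.metric = m.metric
        WHERE m.service = ? AND m.metric = ?
        ORDER BY m.ts DESC
        LIMIT 1
        """,
        (service, metric),
    ).fetchone()


conn = open_capacity_db()
conn.executemany(
    "INSERT INTO measurement VALUES (?, ?, ?, ?, ?)",
    [("payroll", "db01", "tps_capacity", 1000, 450.0),
     ("payroll", "db01", "tps_capacity", 2000, 420.0)],
)
conn.execute("INSERT INTO requirement VALUES ('payroll', 'tps_capacity', 500.0)")
print(capacity_vs_need(conn, "payroll", "tps_capacity"))  # (420.0, 500.0)
```

Keeping every reading rather than only the latest is what allows the historical reporting and trend analysis discussed later.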

Produce Capacity Reports

Derived from the information in the database, three types of report are produced:

- Tuning and Improvement reports for existing services

- Details of excess or under-utilised capacity available for reuse elsewhere

- Exception reports showing where current capacity has been exceeded, or is approaching safe limits and requires attention
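Two of these report types lend themselves to simple threshold rules. The sketch below sorts utilisation readings into exception and under-utilised categories; the threshold values, the `Reading` type and the category labels are hypothetical, and tuning and improvement reports, which need more detailed analysis, are not covered.

```python
from dataclasses import dataclass

# Hypothetical thresholds; in practice these are set per resource type
# by the capacity management team.
SAFE_LIMIT = 0.80   # at or above this, capacity is "approaching safe limits"
UNDER_USED = 0.30   # at or below this, capacity may be reusable elsewhere


@dataclass
class Reading:
    resource: str
    utilisation: float  # fraction of capacity in use (1.0 = fully used)


def classify(reading: Reading) -> str:
    """Map one utilisation reading onto a report category."""
    if reading.utilisation >= 1.0:
        return "exception: capacity exceeded"
    if reading.utilisation >= SAFE_LIMIT:
        return "exception: approaching safe limit"
    if reading.utilisation <= UNDER_USED:
        return "under-utilised: candidate for reuse"
    return "ok"


def build_reports(readings):
    """Group resource names by report category."""
    reports = {}
    for r in readings:
        reports.setdefault(classify(r), []).append(r.resource)
    return reports


readings = [Reading("db01", 1.05), Reading("web01", 0.92),
            Reading("app02", 0.15), Reading("app03", 0.55)]
for category, resources in build_reports(readings).items():
    print(category, resources)
```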

All these Capacity Management activities are closely interdependent, with the results of each being used to drive the others in a controlled manner. In essence, business needs are used to develop system solutions, which are satisfied by resources identified through component reporting. Equally, deficiencies are reported back to Resource Capacity Management, internally through capacity reporting and externally through the incident records and changes that are generated.

The process as a whole delivers its reports via the Capacity Management Plan. This is a strategic document that relates business need to available capacity, proposes suitable medium-term solutions to forecast requirements and identifies areas of under-utilised capacity that may be of use elsewhere.

Capacity Management Input/Output Model

Capacity Management Desired End State

Capacity management should ideally be a proactive exercise in continuous optimisation of the entire IT infrastructure, with the desired end goals being:

- Full visibility of capacity availability and utilisation across the entire enterprise

- No capacity-related service degradations or outages

- IT spend kept to a minimum by ensuring optimal utilisation of existing assets

Clearly, these goals are difficult to achieve. The key to getting as close to them as possible is accepting that Capacity Management has to be taken seriously and resourced accordingly, and that by the time a capacity-related issue has to be dealt with, it is already too late!

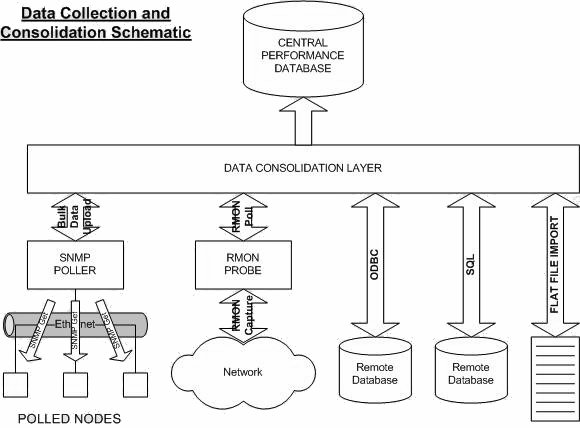

Key to all of this is the ability to collect performance and capacity data from all of the components that make up the IT infrastructure, and to consolidate this data into a centralised repository. This allows reporting via a single toolset and interface, and facilitates data export to other tools by providing a consistent format for data.

As well as the “basic” performance data analysis described above, Capacity Management requires the ability to perform more sophisticated “what if” analyses and projections. This may involve developing complex models: mathematical simulations of the system in question in which variables can be manipulated and the effect on other parts of the model observed.
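A very small “what if” model can be built from the standard M/M/1 queueing formulas. This is an assumption chosen for illustration: a single server, random (Poisson) arrivals and exponentially distributed service times. Utilisation is the arrival rate multiplied by the mean service time, and mean response time is the service time divided by (1 - utilisation). The function names and figures are hypothetical; the point is that one variable, load growth, is manipulated and its effect on response time observed.

```python
def mm1_response_time(arrival_rate: float, service_time: float) -> float:
    """Mean response time in seconds for an M/M/1 queue.

    arrival_rate: requests per second
    service_time: mean seconds of work per request
    """
    utilisation = arrival_rate * service_time
    if utilisation >= 1.0:
        return float("inf")  # demand exceeds capacity: the queue grows without bound
    return service_time / (1.0 - utilisation)


def what_if(base_rate: float, service_time: float, growth_factors):
    """Return (growth, utilisation, response time) for each hypothetical load."""
    results = []
    for g in growth_factors:
        rate = base_rate * g
        results.append((g, rate * service_time, mm1_response_time(rate, service_time)))
    return results


for g, u, r in what_if(base_rate=40.0, service_time=0.02, growth_factors=[1.0, 1.1, 1.2]):
    print(f"load x{g:.2f}: utilisation {u:.0%}, mean response {r * 1000:.1f} ms")
```

With these assumed figures, 20% load growth takes utilisation from 80% to 96% and mean response time from 100 ms to about 500 ms, which illustrates why capacity problems tend to appear suddenly rather than gradually.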

Capacity considerations should be addressed as early in the lifecycle of IT projects as possible. For example, if a proposed system design requires far more wide area network bandwidth between clients and server than is currently available, the additional cost of providing it may be large enough to warrant rethinking the design. If the project is able to fund the required capacity upgrades, it is important that these are fully in place before the system goes live.
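A back-of-the-envelope estimate is often enough to flag such a shortfall at design time. In the sketch below, every input (client count, transaction rate, transaction size, the peak-to-average factor and the existing link speed) is a hypothetical planning figure, and 1 kbyte is taken as 1000 bytes.

```python
def required_wan_kbps(clients: int, tx_per_client_per_hour: float,
                      kbytes_per_tx: float, peak_factor: float = 3.0) -> float:
    """Rough peak WAN bandwidth estimate in kilobits per second.

    Assumes 1 kbyte = 1000 bytes and that peak load is `peak_factor`
    times the hourly average (a hypothetical planning figure).
    """
    tx_per_second = clients * tx_per_client_per_hour / 3600.0
    average_kbps = tx_per_second * kbytes_per_tx * 8.0
    return average_kbps * peak_factor


# Hypothetical design figures for a proposed system.
need = required_wan_kbps(clients=200, tx_per_client_per_hour=60, kbytes_per_tx=50)
available = 2048.0  # kbps on the existing link (hypothetical)
print(f"need ~{need:.0f} kbps, have {available:.0f} kbps")  # need ~4000 kbps, have 2048 kbps
```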

To make early consideration of capacity possible, a centralised capacity management function should be implemented which, as well as having the resources and tools required to collect, analyse, forecast and report upon performance data, operates within a clearly defined and mandatory process framework. This will help ensure that capacity considerations are addressed in a timely manner and that there are no nasty surprises when systems go live.

Capacity Management Tool Requirements

Data Collection

Agent technologies used to support exception-based monitoring and alerting almost invariably provide the ability to extract performance data from the instrumented platform. Generally, this is done either by logging data to a local data store, which is subsequently exported to a central repository and reporting tool, or by direct query of the agent by a centralised polling engine. Polling engines typically use SNMP for this purpose, as SNMP is supported by the majority of agent technologies. The other standards-based means of collecting data is RMON (Remote MONitoring). RMON probes analyse every data frame traversing the network segment on which they reside, and record detailed traffic statistics such as per-protocol utilisation, conversation pairs and top talkers. These data are periodically uploaded to a remote data collector for centralised storage and reporting.
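Once a polling engine has retrieved interface counters over SNMP, turning them into utilisation is simple arithmetic. The sketch below assumes two successive samples of a 32-bit octet counter such as `ifInOctets` from the IF-MIB, together with the interface speed in bits per second (`ifSpeed`); the SNMP retrieval itself is left out, and the function names are hypothetical. Because a 32-bit counter wraps back to zero at 2^32, the difference is taken modulo 2^32, which is only valid if the counter wraps at most once between polls.

```python
COUNTER32_MODULUS = 2 ** 32  # SNMP Counter32 values wrap back to 0 at 2^32


def counter_delta(prev: int, curr: int, modulus: int = COUNTER32_MODULUS) -> int:
    """Increase in a wrapping counter between two polls.

    Correct only if the counter wrapped at most once between samples.
    """
    return (curr - prev) % modulus


def link_utilisation(prev_octets: int, curr_octets: int, interval_s: float,
                     if_speed_bps: int, modulus: int = COUNTER32_MODULUS) -> float:
    """Fraction of link capacity used (one direction) between two polls."""
    bits = counter_delta(prev_octets, curr_octets, modulus) * 8
    return bits / (interval_s * if_speed_bps)


# Hypothetical samples from a 10 Mbit/s interface polled 300 s apart.
print(link_utilisation(1_000_000, 38_500_000, 300, 10_000_000))  # 0.1
# The counter wrapped between these two polls.
print(counter_delta(4_294_000_000, 2_000_000))  # 2967296
```

On fast links a 32-bit octet counter can wrap more than once within a typical polling interval, which is why the 64-bit `ifHCInOctets` counters are generally preferred there (used here by passing `modulus=2**64`).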

As well as direct polling, data collection can be effected via other interfaces such as .csv file import, SQL, BCP, ODBC and so on. The key is that it should be possible to incorporate performance data from any source.
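Accepting data from any source is easiest when each source is converted into one common record layout before loading. The sketch below does this for a CSV export; the column names (`host`, `metric`, `timestamp`, `value`), the `source` tag and the record layout are all hypothetical, and timestamps are assumed to be ISO 8601 strings in UTC with no offset.

```python
import csv
import io
from datetime import datetime, timezone


def import_csv(text: str, source: str):
    """Convert CSV rows into common (source, resource, metric, ts, value) records.

    Assumes hypothetical columns host, metric, timestamp, value, with
    timestamps written as ISO 8601 strings in UTC without an offset.
    """
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        when = datetime.fromisoformat(row["timestamp"]).replace(tzinfo=timezone.utc)
        records.append((source, row["host"], row["metric"],
                        int(when.timestamp()), float(row["value"])))
    return records


sample = "host,metric,timestamp,value\nweb01,cpu_pct,2024-01-01T00:00:00,42.5\n"
print(import_csv(sample, "legacy_export"))
# [('legacy_export', 'web01', 'cpu_pct', 1704067200, 42.5)]
```

An SQL or ODBC source would need a different reader, but could emit the same record layout, so that everything downstream stays source-independent.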

The impact of polling for large volumes of data on the network must also be considered. The Capacity management tool should not itself be the cause of performance problems. Key to this is the ability to specify precisely which data are required when polling remote agents for data, thereby minimising the data which has to traverse network links.

Where data are to be polled from systems at the other end of (relatively) low-bandwidth WAN links, distributed polling allows the consumption of this scarce resource to be kept to a minimum.

In this arrangement, a polling server is situated on the same LAN as the polled systems and performs initial aggregation of the collected data into the required format for upload into the central performance database. The overhead of transferring this data over the WAN link is lower than that of polling across it, and the transfer can be scheduled “out of hours”, when other applications make less use of the WAN link.
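One possible form of that initial aggregation is to summarise raw samples into one row per time bucket before upload. The sketch below assumes hourly buckets holding minimum, mean, maximum and sample count; the record layout and names are hypothetical, and the summary a real central database expects may differ.

```python
from collections import defaultdict


def aggregate_for_upload(samples, bucket_s: int = 3600):
    """Summarise raw samples into one row per resource, metric and time bucket.

    samples: iterable of (resource, metric, ts, value) collected on the local LAN.
    Returns (resource, metric, bucket_start, min, mean, max, count) tuples, so
    only one row per bucket has to cross the WAN instead of every sample.
    """
    buckets = defaultdict(list)
    for resource, metric, ts, value in samples:
        buckets[(resource, metric, ts - ts % bucket_s)].append(value)
    return [
        (resource, metric, start, min(vals), sum(vals) / len(vals), max(vals), len(vals))
        for (resource, metric, start), vals in sorted(buckets.items())
    ]


# Twelve hypothetical 5-minute CPU samples from one hour become a single row.
raw = [("branch-srv1", "cpu_pct", ts, float(v))
       for ts, v in zip(range(0, 3600, 300), range(10, 130, 10))]
print(aggregate_for_upload(raw))
# [('branch-srv1', 'cpu_pct', 0, 10.0, 65.0, 120.0, 12)]
```

Keeping min and max alongside the mean preserves some of the peak information that a plain average would hide.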

Reporting

Once data have been collected and consolidated into a central repository, a reporting interface is required to present them. Typically, the reporting interface should allow some degree of data manipulation as part of the report generation process. Reports are constructed with a report development utility and then run against the performance database on either an ad hoc or a scheduled basis. In most cases reports are presented via the web, on a performance reporting website.

Data Management

Clearly, the volume of data collected when an entire infrastructure is fully instrumented for performance purposes, and all components are routinely reported upon, is going to be large, so the ability to manage this data effectively is also important. At its simplest, this involves specifying a retention period after which data are deleted, so that the performance database does not fill up.

However, a slightly more sophisticated approach to performance data management is based on the rule of thumb that less detail is required the further back in time you go. For example, server CPU utilisation may be collected at 5-minute intervals, and reporting at this granularity for the previous 24 hours may well be useful. However, how CPU utilisation varied across 5-minute samples three months ago is likely to be more detail than is needed, and an hourly average is likely to be sufficient for longer-term historical analysis. Data are therefore averaged, or “rolled up”, as they age, according to a policy defined by the application administrator to suit the space available and the requirements of the organisation and its capacity management team.
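A roll-up and retention policy of this kind can be expressed as a few SQL statements run periodically. In the sketch below, the table names (`raw_sample`, `hourly_avg`) and the policy itself (raw samples kept for 24 hours, hourly averages kept for a year) are assumptions for illustration, with SQLite standing in for the performance database.

```python
import sqlite3

HOUR = 3600
DAY = 86_400


def create_tables(conn: sqlite3.Connection) -> None:
    """Hypothetical layout: raw 5-minute samples plus hourly roll-ups."""
    conn.executescript("""
        CREATE TABLE IF NOT EXISTS raw_sample (
            resource TEXT, metric TEXT, ts INTEGER, value REAL);
        CREATE TABLE IF NOT EXISTS hourly_avg (
            resource TEXT, metric TEXT, hour INTEGER, avg_value REAL,
            PRIMARY KEY (resource, metric, hour));
    """)


def apply_retention_policy(conn: sqlite3.Connection, now: int,
                           raw_keep_s: int = DAY,
                           retention_s: int = 365 * DAY) -> None:
    """Roll ageing raw samples up into hourly averages, then purge old data.

    The cut-off is aligned to an hour boundary so that no hour is split
    across two roll-up runs.
    """
    cutoff = now - raw_keep_s
    cutoff -= cutoff % HOUR
    with conn:  # roll-up and deletes commit (or roll back) together
        conn.execute(
            """
            INSERT INTO hourly_avg (resource, metric, hour, avg_value)
            SELECT resource, metric, ts - ts % 3600, AVG(value)
            FROM raw_sample
            WHERE ts < ?
            GROUP BY resource, metric, ts - ts % 3600
            """,
            (cutoff,),
        )
        conn.execute("DELETE FROM raw_sample WHERE ts < ?", (cutoff,))
        conn.execute("DELETE FROM hourly_avg WHERE hour < ?", (now - retention_s,))


conn = sqlite3.connect(":memory:")
create_tables(conn)
now = 10 * DAY
old = [("web01", "cpu_pct", ts, float(v))
       for ts, v in zip(range(0, HOUR, 300), range(10, 130, 10))]
conn.executemany("INSERT INTO raw_sample VALUES (?, ?, ?, ?)",
                 old + [("web01", "cpu_pct", now - 600, 50.0)])
apply_retention_policy(conn, now)
print(conn.execute("SELECT * FROM hourly_avg").fetchall())  # [('web01', 'cpu_pct', 0, 65.0)]
print(conn.execute("SELECT COUNT(*) FROM raw_sample").fetchone())  # (1,)
```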

Proactive Notification

Another design aim of the Capacity Management solution is that it should be able to compare performance data collected over time against thresholds or policies defined by the Capacity Management team, and to provide automatic notification when a threshold or policy has been breached. In some respects this is similar to the monitoring of a performance metric by an individual monitoring agent, but when done centrally by the Capacity Management tool it is generally applied to more than one monitored system, or to a combination of metrics from different systems.
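A central policy of this kind might, for instance, look at the mean CPU utilisation across a group of servers and notify only when it stays above a threshold for several consecutive samples. The threshold, the run length and the function name below are hypothetical policy choices used for illustration.

```python
from typing import Dict, List


def sustained_cluster_breaches(cpu_by_host: Dict[str, List[float]],
                               threshold: float = 75.0,
                               sustain: int = 3) -> List[int]:
    """Sample indices at which mean CPU across all hosts has been above
    `threshold` percent for `sustain` consecutive samples.

    cpu_by_host maps host name to equal-length lists of CPU % samples taken
    at the same times. threshold and sustain are hypothetical policy values.
    """
    cluster_mean = [sum(col) / len(col) for col in zip(*cpu_by_host.values())]
    alerts, run = [], 0
    for i, value in enumerate(cluster_mean):
        run = run + 1 if value > threshold else 0
        if run == sustain:  # notify once when the breach becomes sustained
            alerts.append(i)
    return alerts


samples = {
    "app01": [70, 80, 85, 90, 60],
    "app02": [60, 78, 80, 82, 50],
}
print(sustained_cluster_breaches(samples))  # [3]
```

A single busy host does not trigger a notification while the group as a whole stays below the threshold, which is the kind of cross-system view an individual agent cannot provide.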

Data Export

The ability to export data for further or more detailed analysis by other applications is also required, and generally needs to be supported via the same kind of interfaces discussed earlier in relation to bulk data import.
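For flat-file export, a minimal sketch using Python's standard `csv` module is shown below; the header names and record layout are hypothetical and would be chosen to suit the receiving application.

```python
import csv
import io


def export_csv(records, header=("resource", "metric", "ts", "value")) -> str:
    """Serialise records to CSV text for import into another tool."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(header)
    writer.writerows(records)
    return buf.getvalue()


print(export_csv([("web01", "cpu_pct", 1704067200, 42.5)]), end="")
```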

Capacity Modelling Tool Requirements

As stated earlier, capacity modelling is the more complex analysis and presentation of the collected capacity and performance data. Capacity modelling applications may or may not be able to collect those data themselves; for the purposes of this discussion it is assumed that data are collected and held in a database by a separate application.

Data Import

Data import from remote repositories should be achievable via the standard open interfaces prevalent in the IT industry, such as SQL, ODBC, JDBC and XML. Additionally, the ability to pre-format and import flat-file data should be supported for maximum flexibility.

Construction of Models

Mathematical models representative of the environment being analysed should be supported using standard analytical and statistical methods such as queuing theory and regression analysis. Some applications supply pre-built or “canned” models representative of certain types of hardware, software or more complex distributed systems, and these can be extremely valuable in removing some or all of the need to build models by hand.
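As a simple example of regression analysis, an ordinary least-squares line can be fitted to historical utilisation and projected forward to estimate when a threshold will be reached. The sketch assumes a linear trend, which real workloads with seasonal or step changes may not follow; the data and function names are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def periods_until(threshold, xs, ys):
    """x value at which the fitted trend reaches `threshold`, or None if the
    trend is flat or falling."""
    intercept, slope = fit_line(xs, ys)
    if slope <= 0:
        return None
    return (threshold - intercept) / slope


# Hypothetical monthly peak disk utilisation (%) for months 0 to 5.
months = [0, 1, 2, 3, 4, 5]
peak = [50, 53, 56, 59, 62, 65]
print(periods_until(80, months, peak))  # 10.0
```

Python 3.10 and later also provide `statistics.linear_regression`, which returns the same slope and intercept.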

Data Visualisation

A reporting interface to view the data is required. This should support online, ad hoc and scheduled automatic report generation.

Data Export

Once analyses and calculations have been performed, it may be desirable to export the derived data back to the central repository, so that they can be reported on and presented via the main reporting site. Data export should therefore be supported via the same open interfaces mentioned earlier for data import.